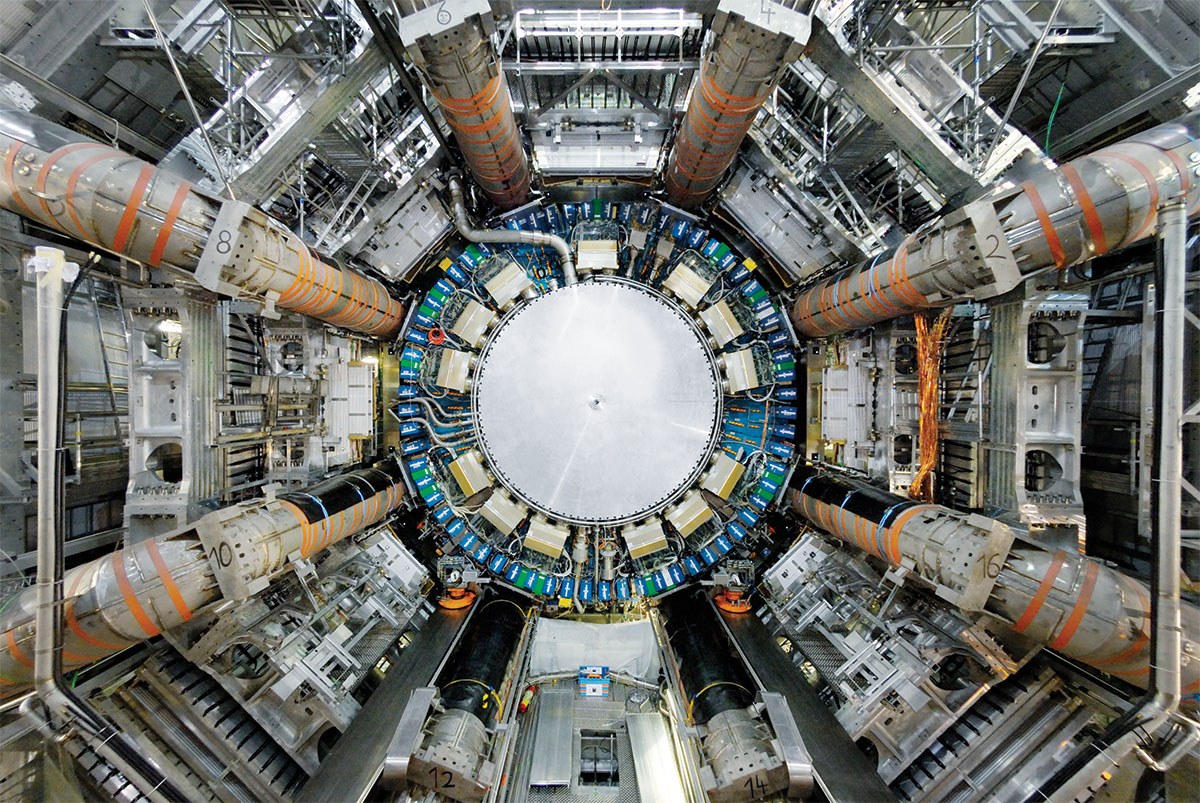

Big Bang. Bigger Data. At CERN, physicists are looking for nothing less than the fundamental principles of the universe. studiomonaco looks for the data behind them.

Here, they trace the origin of the world and simulate the Big Bang. They do it with the "Large Hadron Collider", the world's largest and most powerful particle accelerator: a 27-kilometre ring of superconducting magnets with a number of accelerating structures that boost the energy of the particles along the way.

Collisions that generate a petabyte of data. Per second.

Physicists at CERN's data centre in Meyrin, the heart of the lab's infrastructure, sift through these petabytes, mostly in real time, running complex algorithms to turn them into structured data. But even after filtering out almost 99% of it, CERN expected to gather around 50 to 70 petabytes of data in 2018. A rather conservative estimate: by the end of the year, there were 80 petabytes of data on tape.


Today it is all about collecting the data, reducing it as much as possible, and analyzing whatever is left offline. Future experiments, however, will have to process much more data, much faster, in real time. That's why CERN's scientists are exploring how advanced applications can fill this gap, as more and more information is collected from sensors, wearable devices and machines, and the need to analyze that massive amount of information in real time keeps growing.

"Listening to the data"

Means finding and organizing specific patterns in raw datasets while discarding everything else as too "noisy".

Uh. Ah.

And this is what it sounds like: